Update README Formatting

- README.md +20 -24

- configs/metadata.json +2 -1

- docs/README.md +20 -24

README.md

CHANGED

|

@@ -5,14 +5,13 @@ tags:

|

|

| 5 |

library_name: monai

|

| 6 |

license: apache-2.0

|

| 7 |

---

|

| 8 |

-

#

|

| 9 |

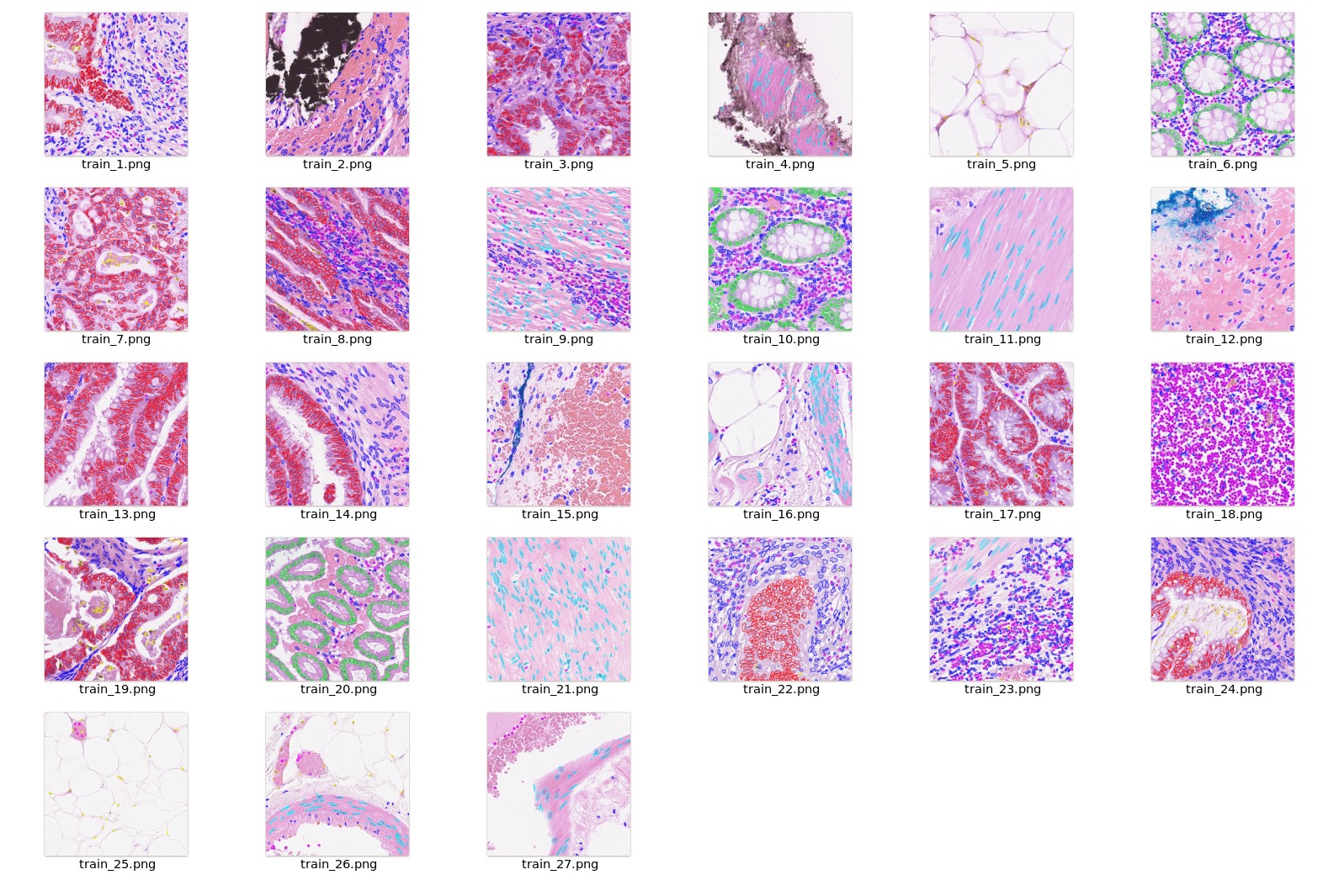

A pre-trained model for segmenting nuclei cells with user clicks/interactions.

|

| 10 |

|

| 11 |

|

| 12 |

|

| 13 |

|

| 14 |

|

| 15 |

-

# Model Overview

|

| 16 |

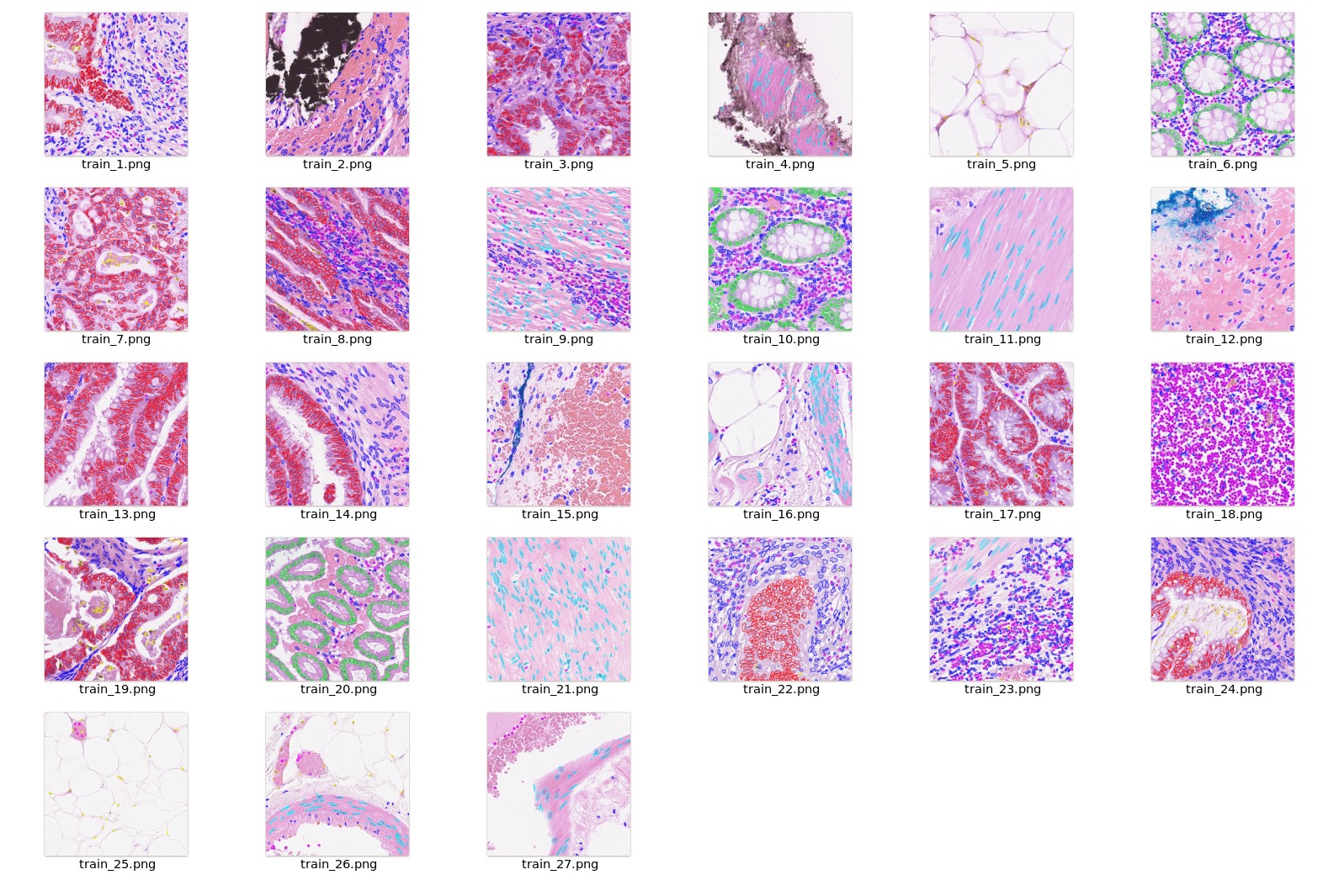

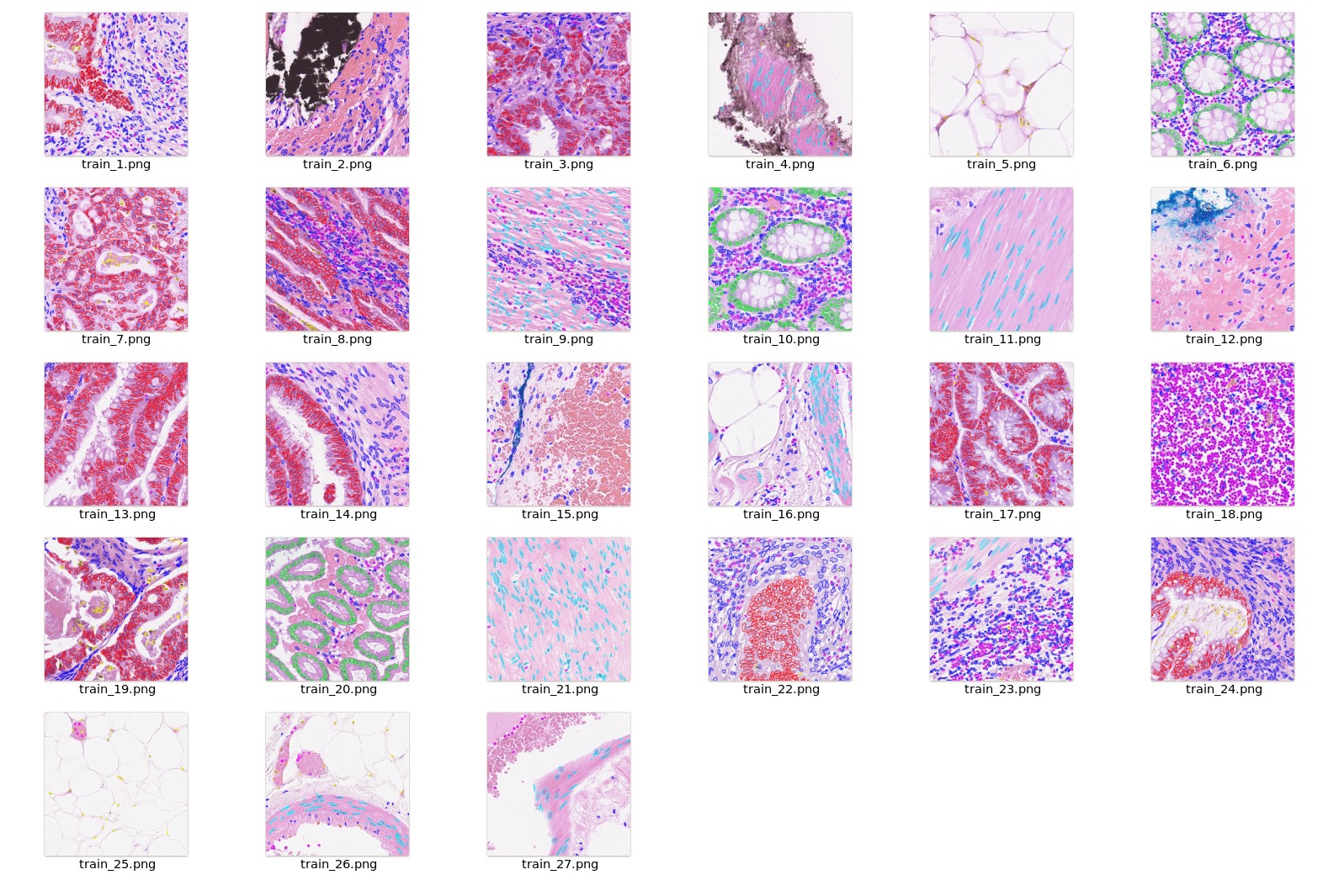

This model is trained using [BasicUNet](https://docs.monai.io/en/latest/networks.html#basicunet) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

|

| 17 |

|

| 18 |

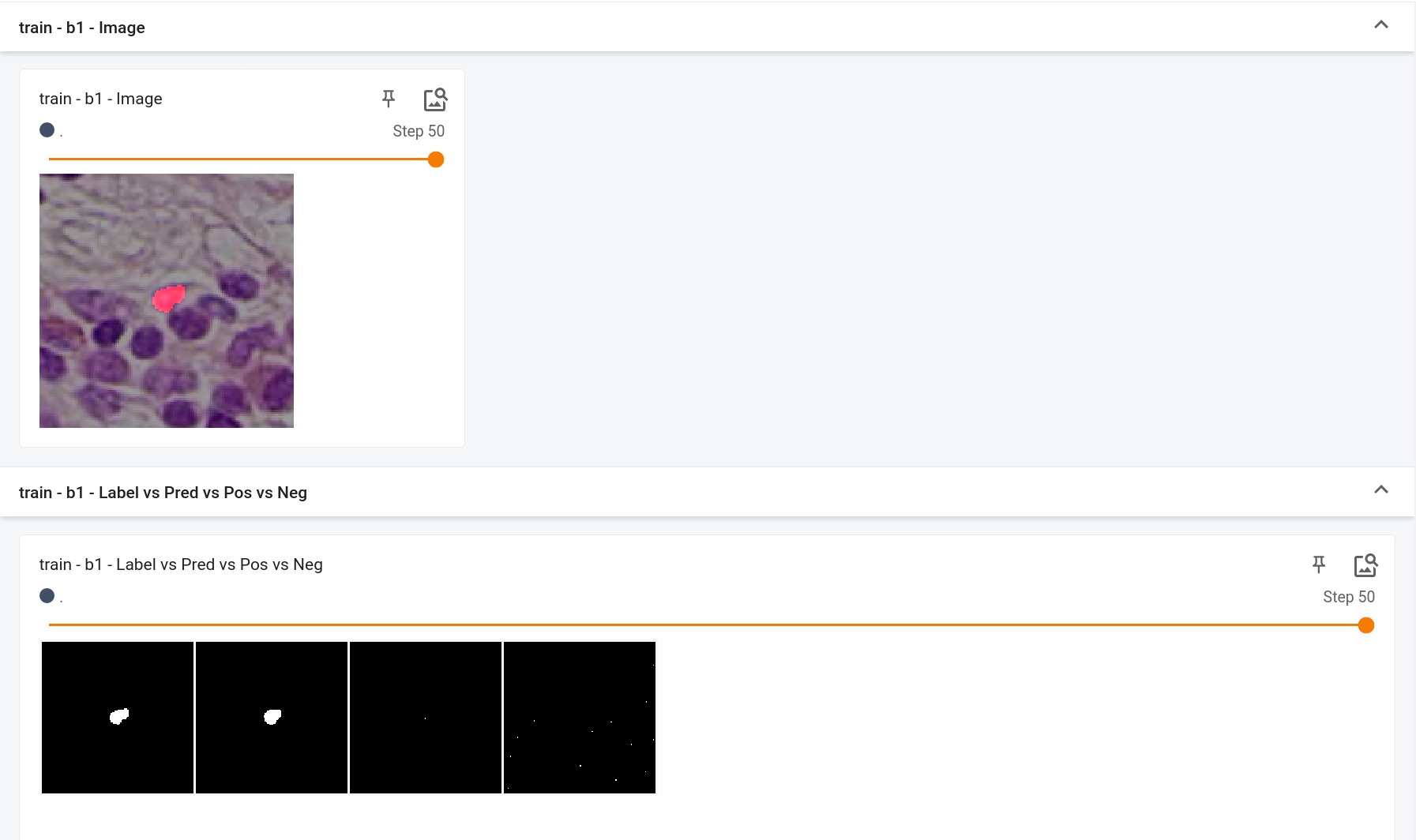

## Data

|

|

@@ -23,17 +22,6 @@ unzip -q consep_dataset.zip

|

|

| 23 |

```

|

| 24 |

<br/>

|

| 25 |

|

| 26 |

-

## Training configuration

|

| 27 |

-

The training was performed with the following:

|

| 28 |

-

|

| 29 |

-

- GPU: at least 12GB of GPU memory

|

| 30 |

-

- Actual Model Input: 5 x 128 x 128

|

| 31 |

-

- AMP: True

|

| 32 |

-

- Optimizer: Adam

|

| 33 |

-

- Learning Rate: 1e-4

|

| 34 |

-

- Loss: DiceLoss

|

| 35 |

-

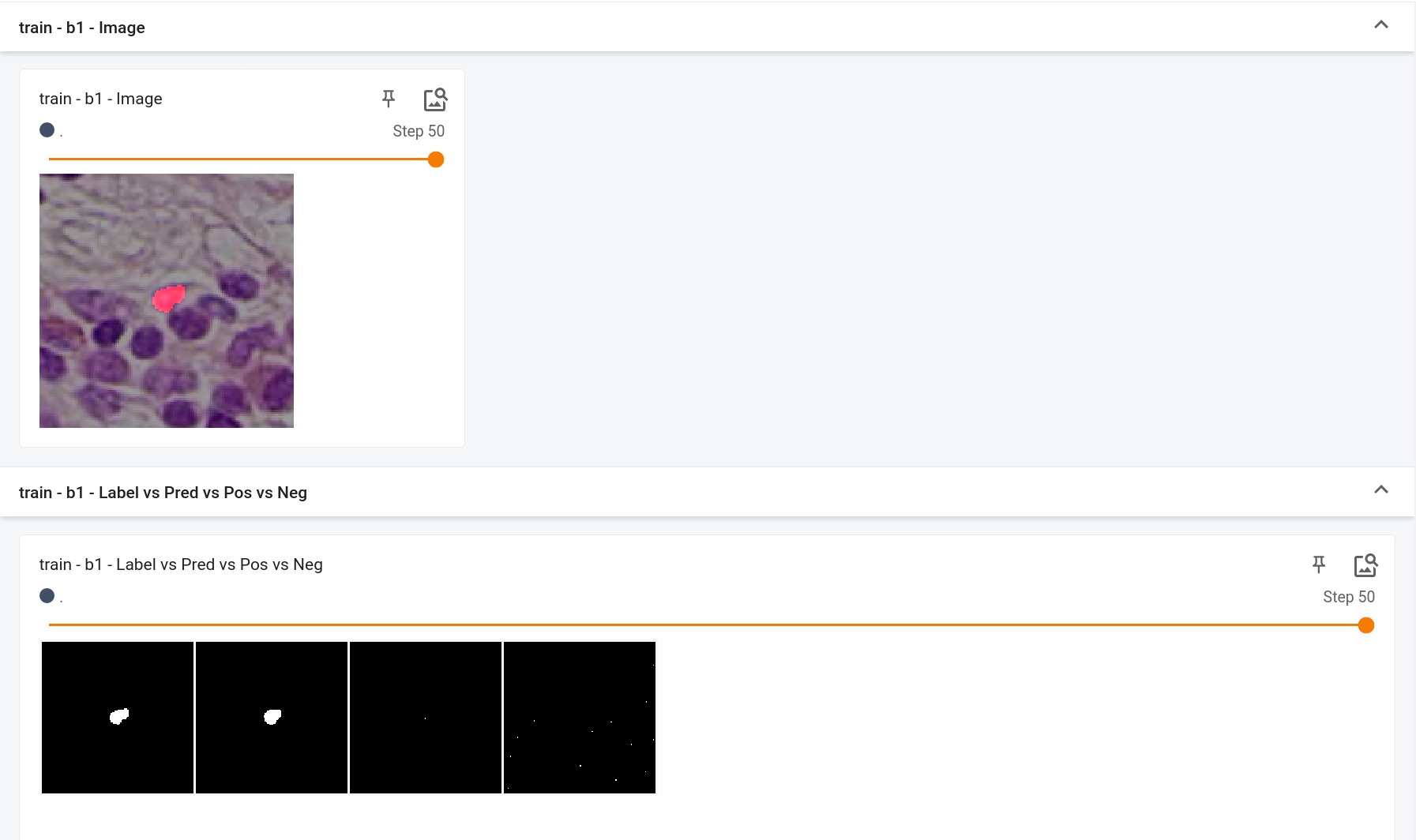

|

| 36 |

-

|

| 37 |

### Preprocessing

|

| 38 |

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

|

| 39 |

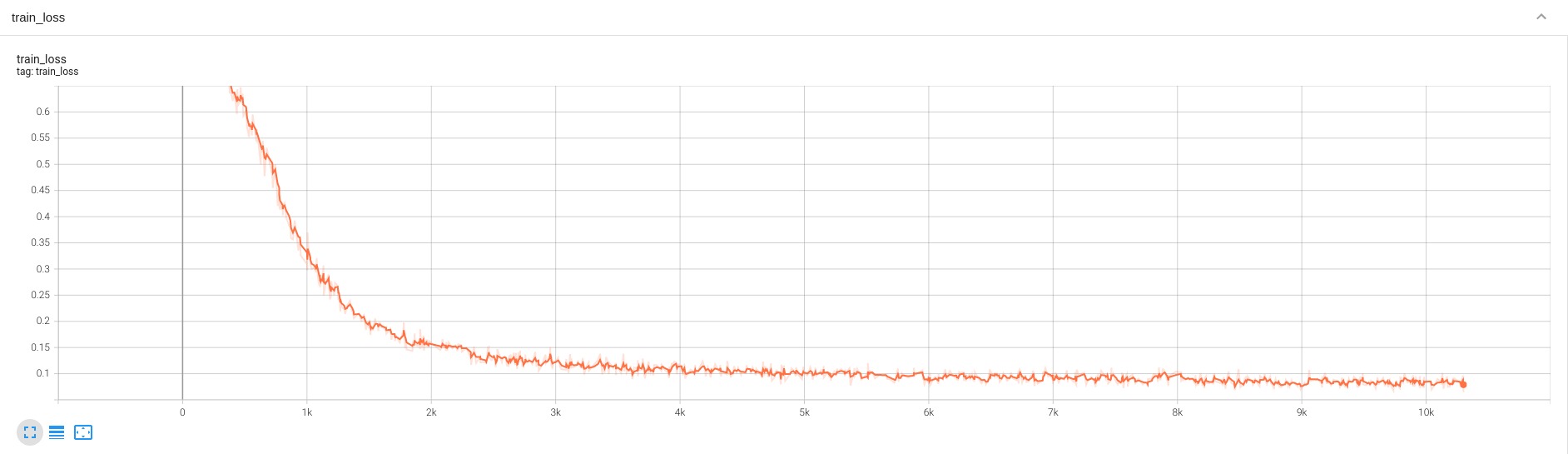

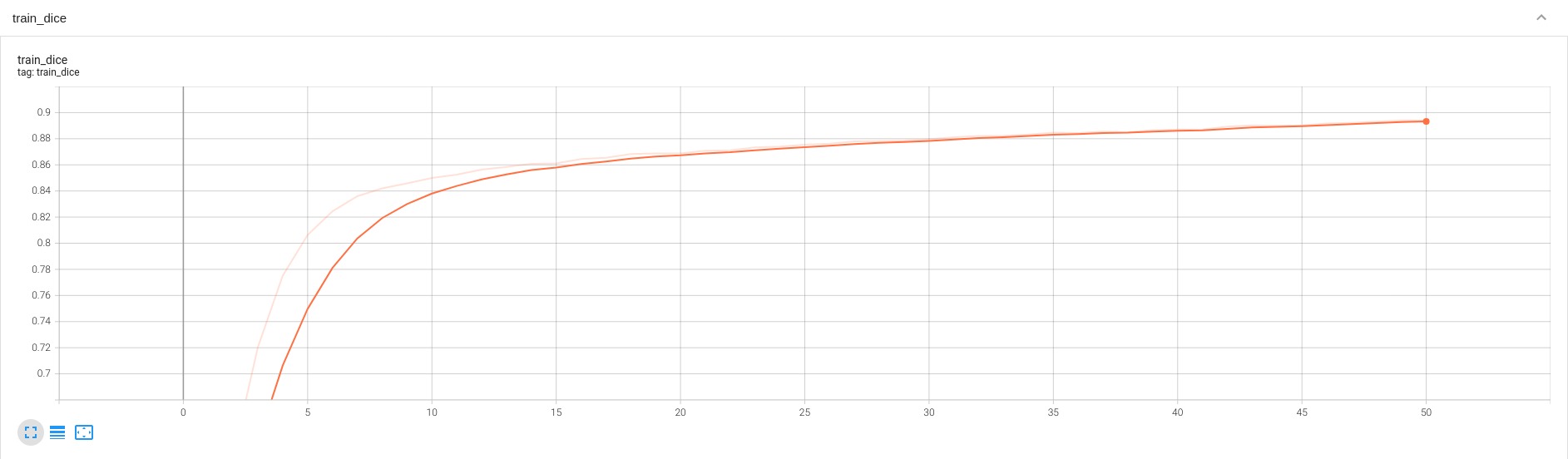

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

|

|

@@ -91,33 +79,45 @@ Example dataset.json

|

|

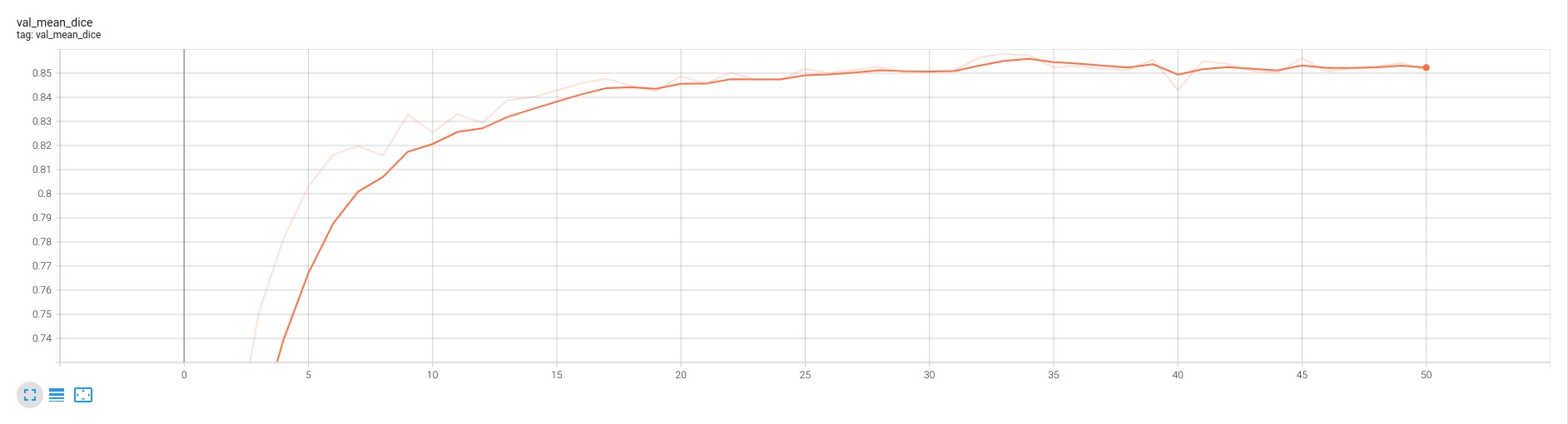

| 91 |

}

|

| 92 |

```

|

| 93 |

|

| 94 |

|

| 95 |

-

|

| 96 |

-

|

|

|

|

| 97 |

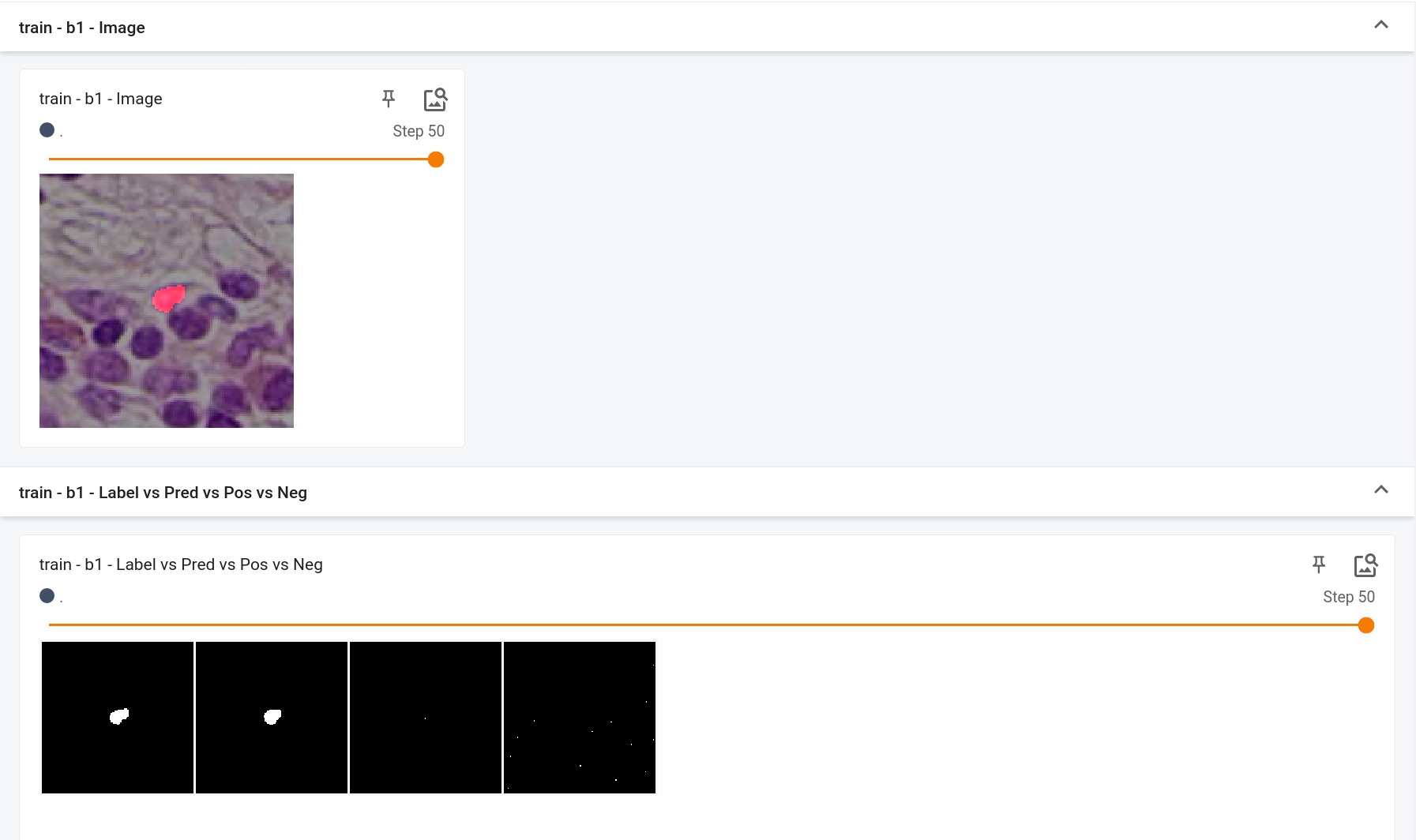

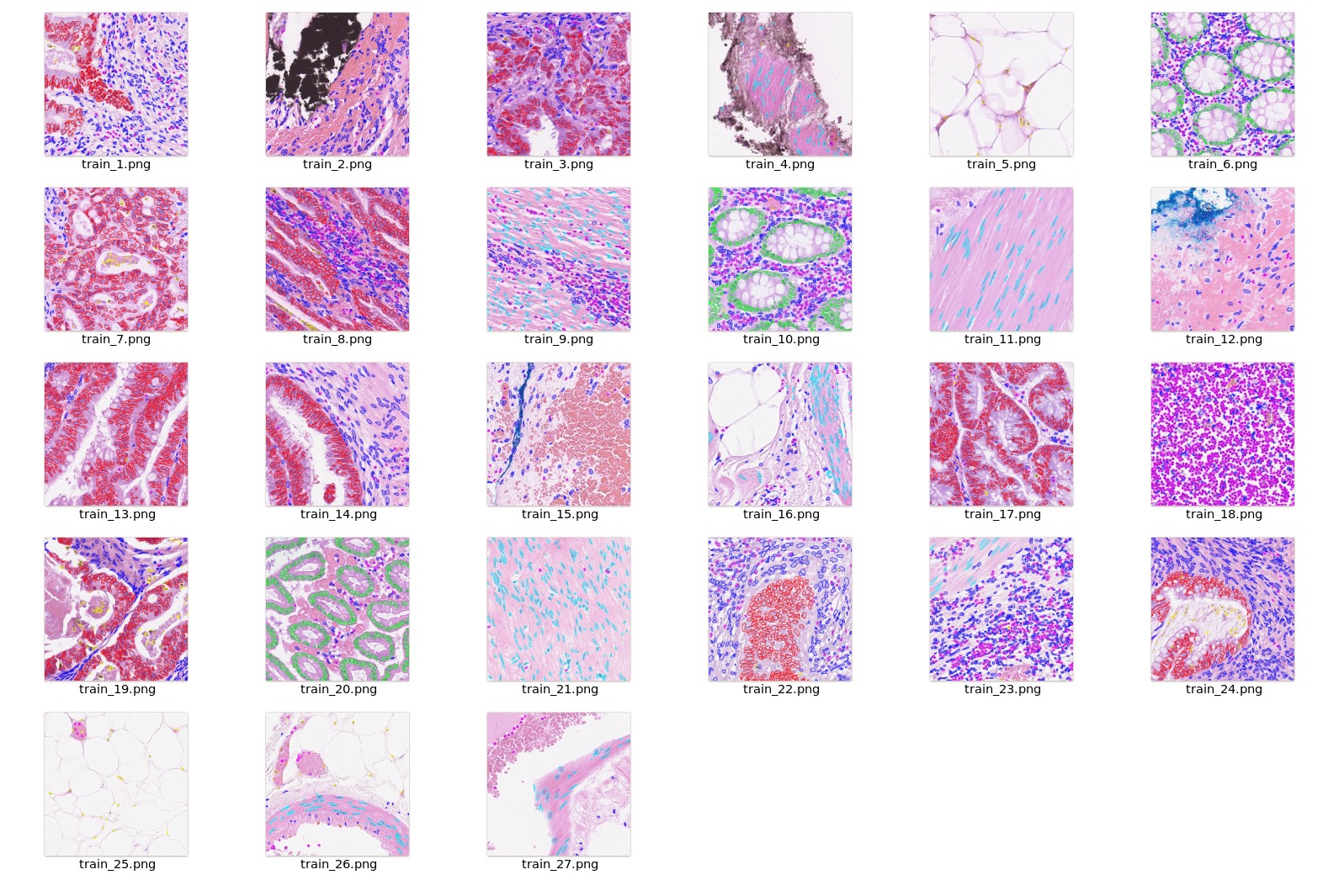

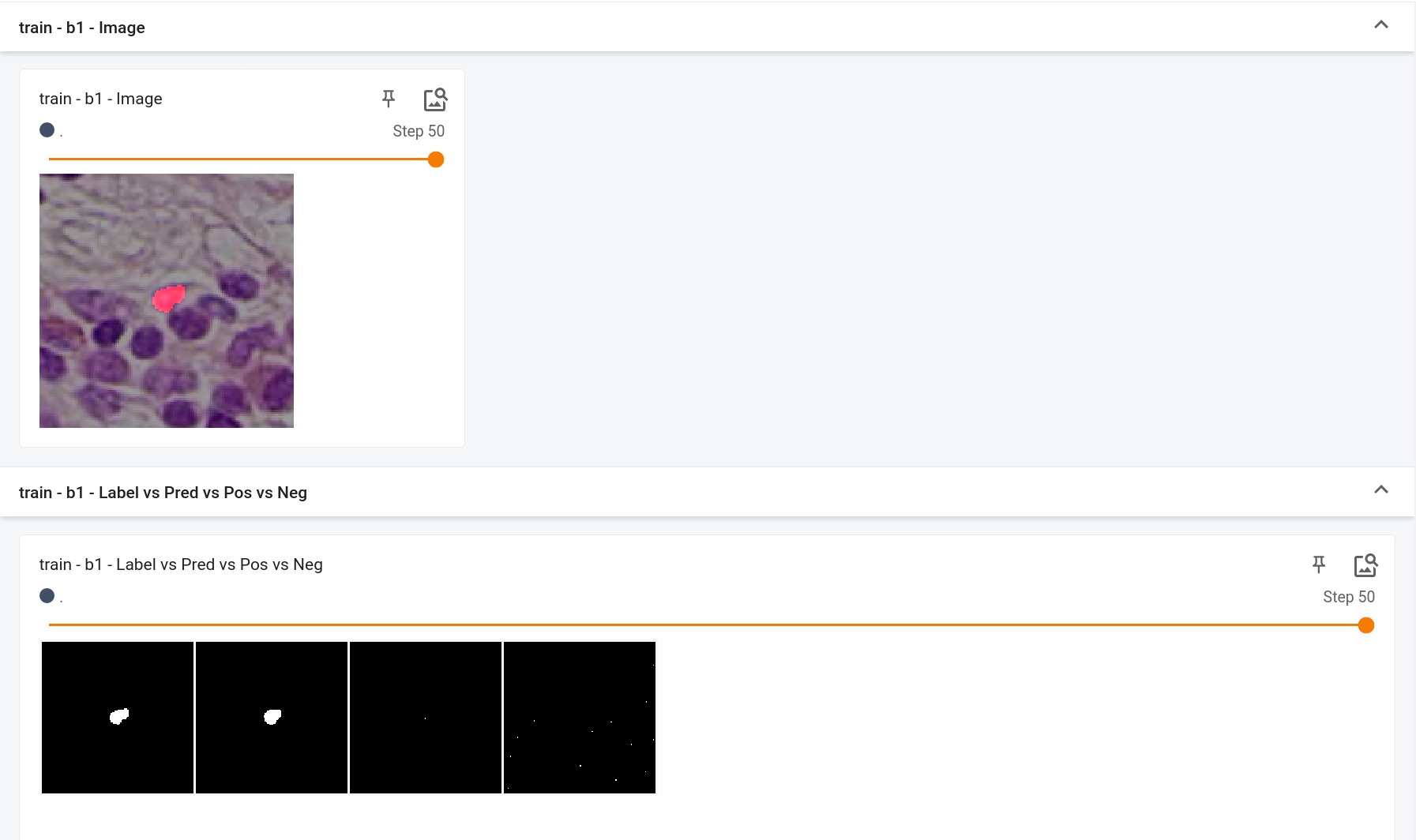

- 3 RGB channels

|

| 98 |

- +ve signal channel (this nuclei)

|

| 99 |

- -ve signal channel (other nuclei)

|

| 100 |

|

| 101 |

-

|

|

|

|

| 102 |

- 0 = Background

|

| 103 |

- 1 = Nuclei

|

| 104 |

|

| 105 |

|

| 106 |

|

| 107 |

-

|

|

|

|

| 108 |

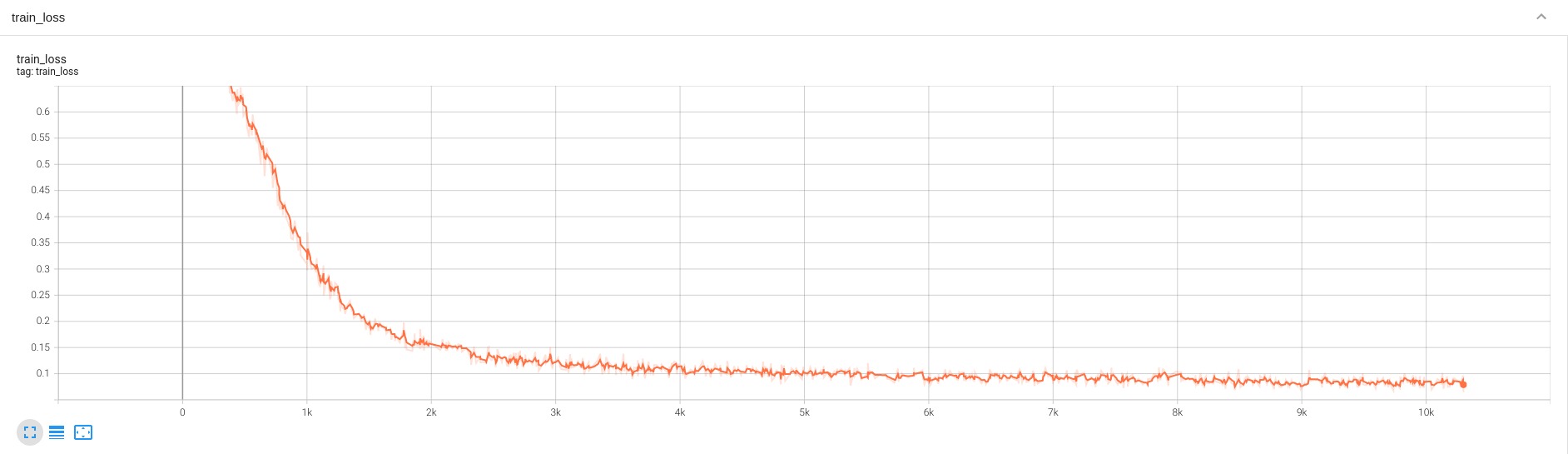

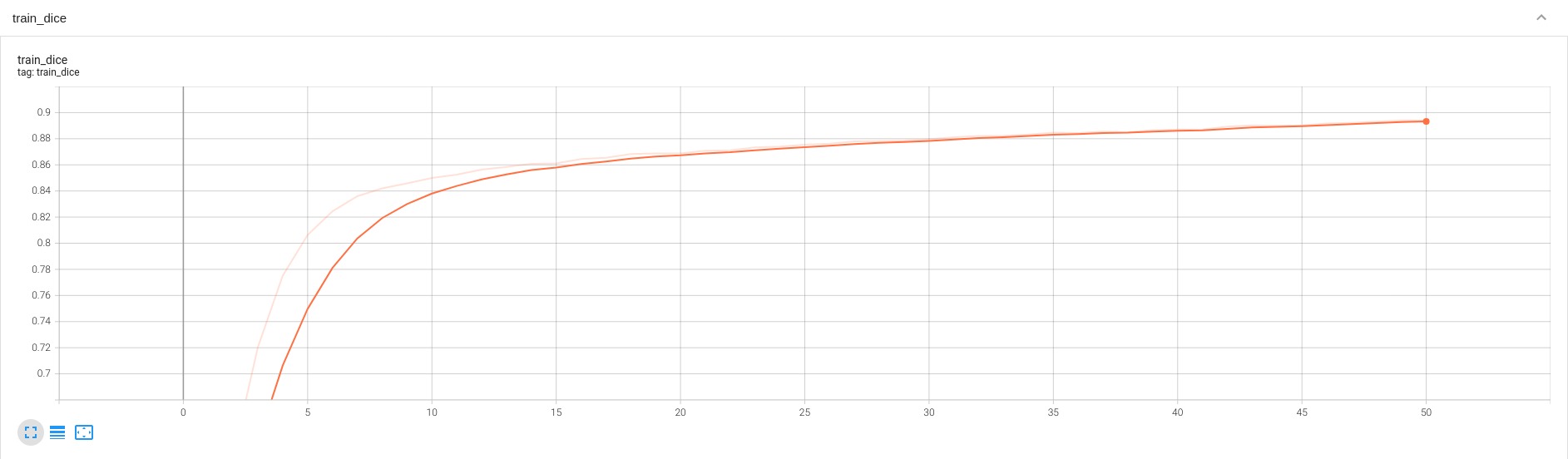

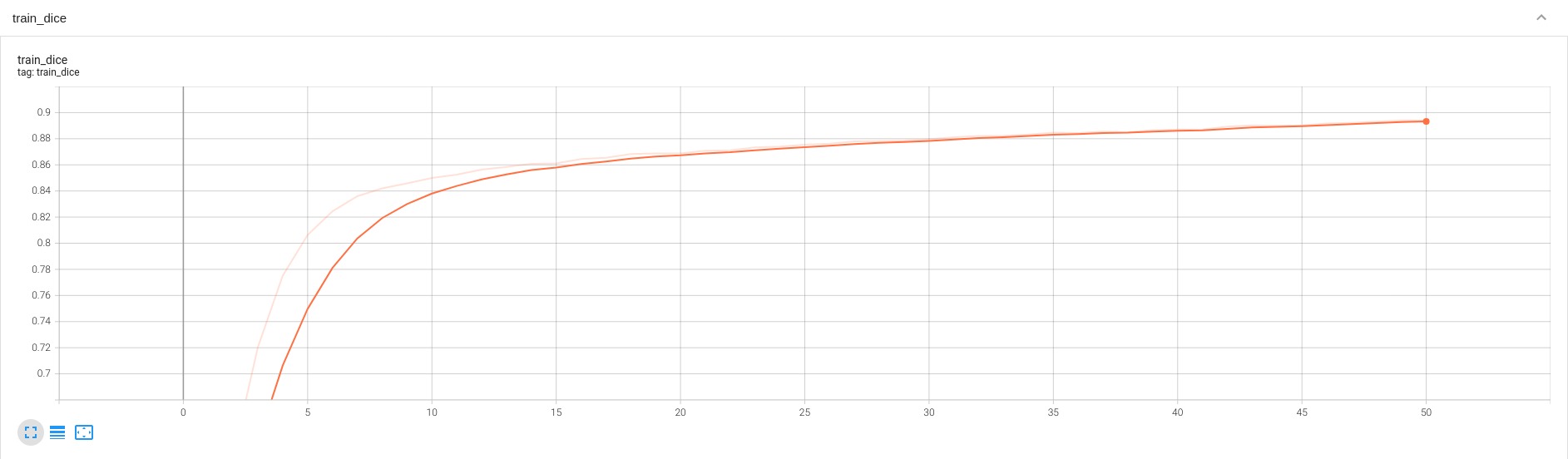

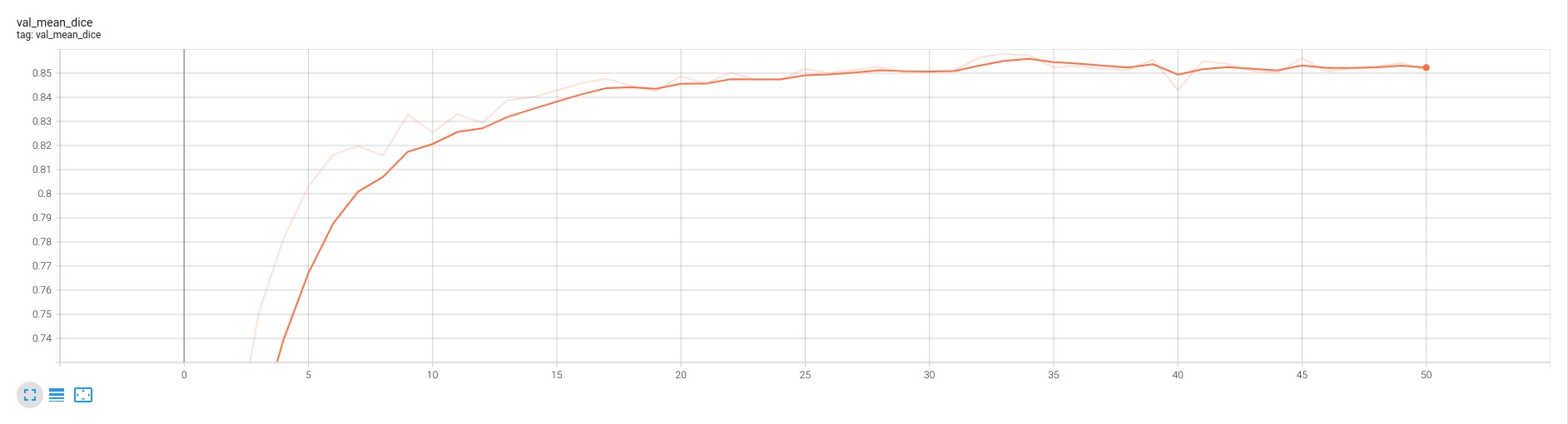

This model achieves the following Dice score on the validation data provided as part of the dataset:

|

| 109 |

|

| 110 |

- Train Dice score = 0.89

|

| 111 |

- Validation Dice score = 0.85

|

| 112 |

|

| 113 |

|

| 114 |

-

|

| 115 |

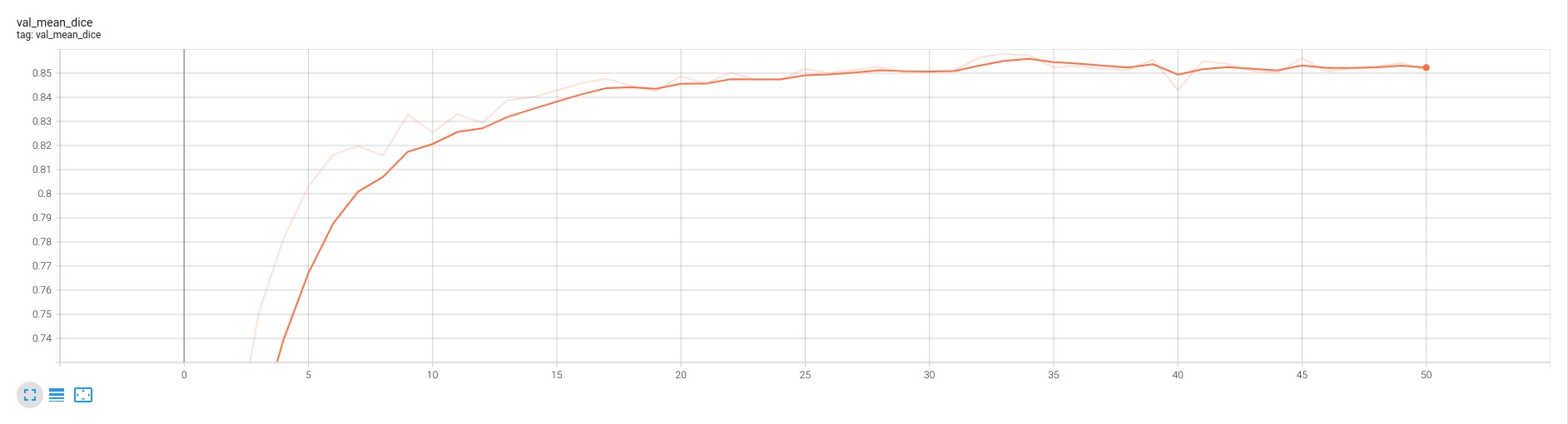

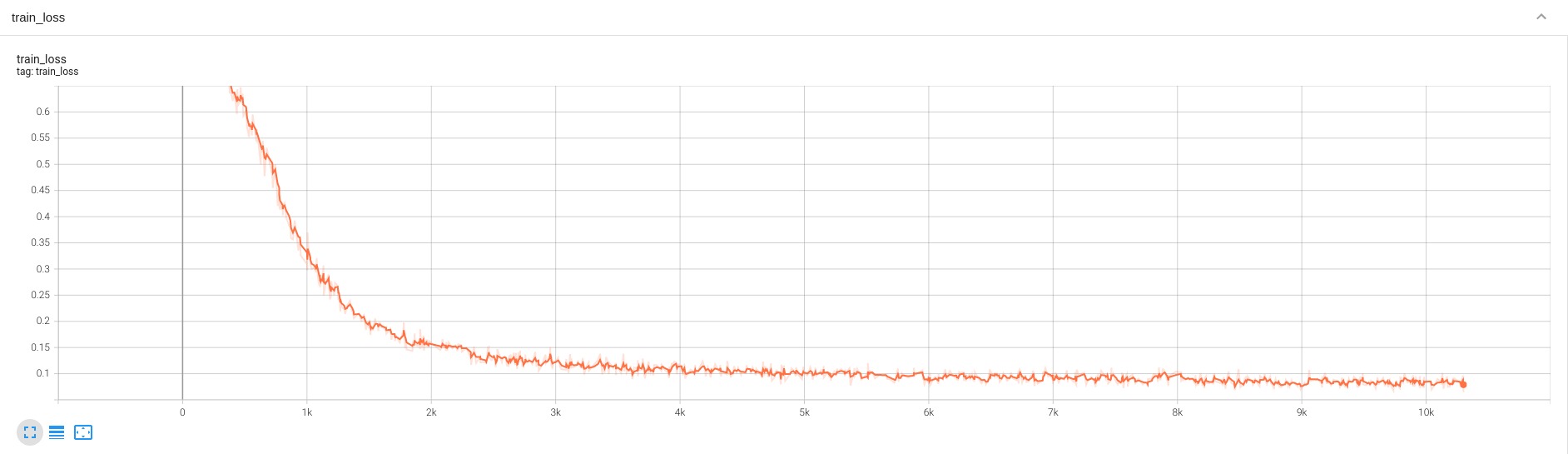

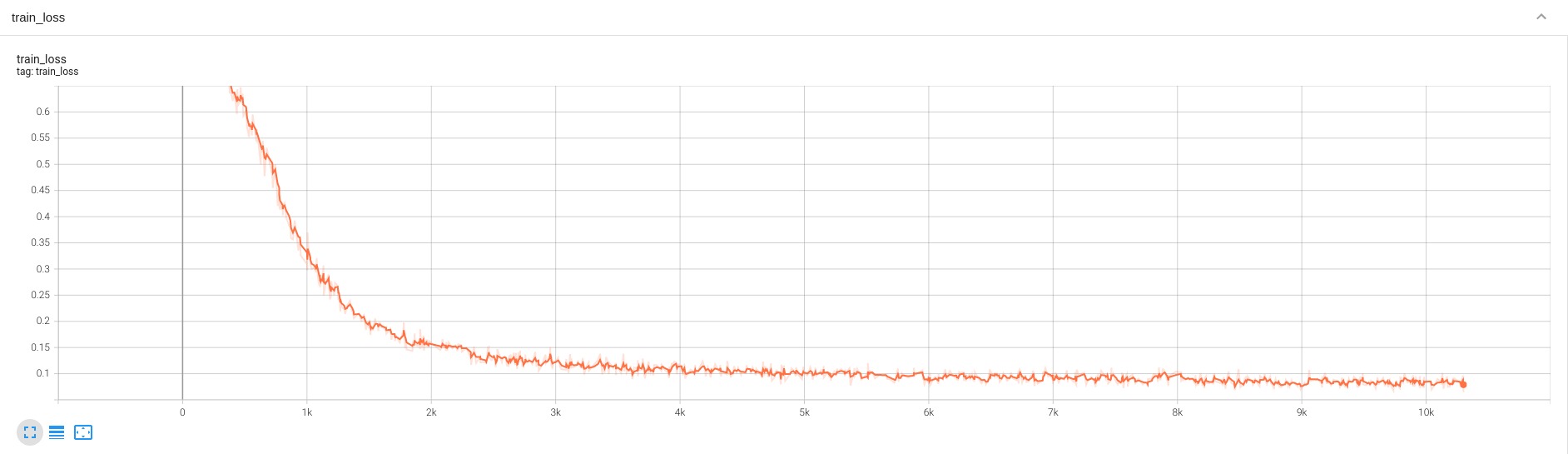

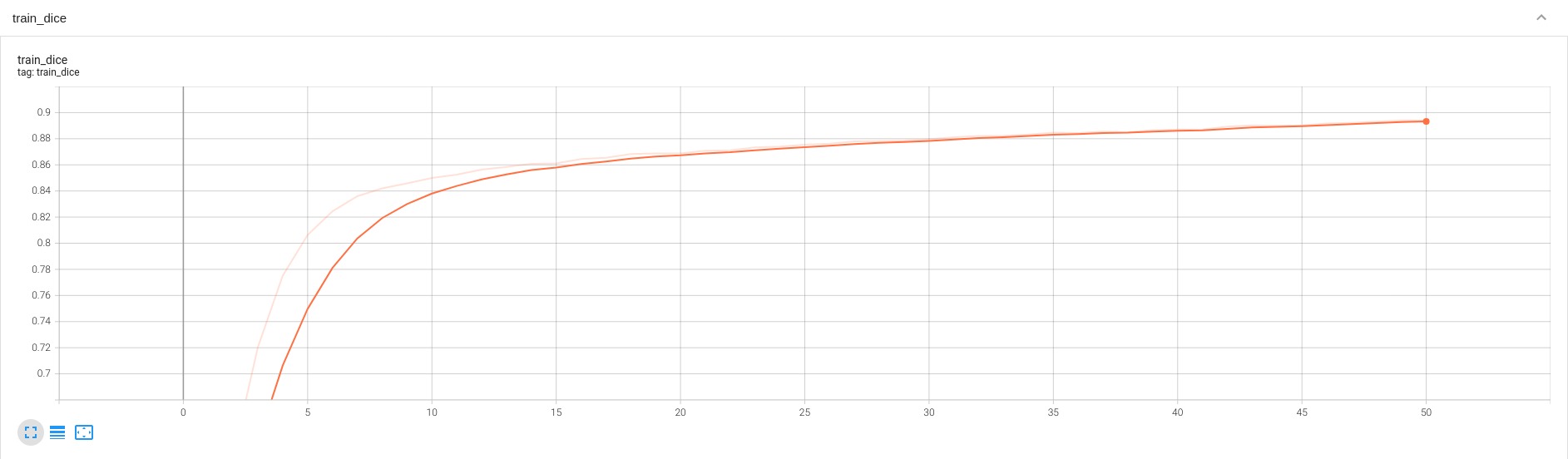

A graph showing the training Loss and Dice over 50 epochs.

|

| 116 |

|

| 117 |

<br>

|

| 118 |

<br>

|

| 119 |

|

| 120 |

-

|

| 121 |

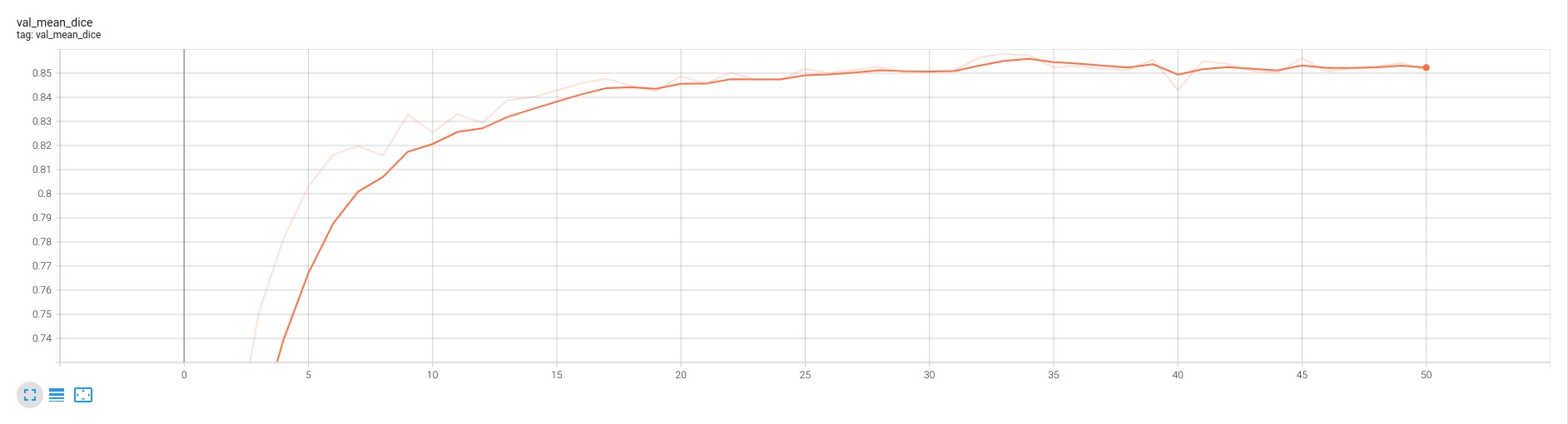

A graph showing the validation mean Dice over 50 epochs.

|

| 122 |

|

| 123 |

<br>

|

|

@@ -140,8 +140,7 @@ python -m monai.bundle run --config_file configs/train.json

|

|

| 140 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

|

| 141 |

```

|

| 142 |

|

| 143 |

-

Please note that the distributed training

|

| 144 |

-

Please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html) for more details.

|

| 145 |

|

| 146 |

#### Override the `train` config to execute evaluation with the trained model:

|

| 147 |

|

|

@@ -161,9 +160,6 @@ torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config

|

|

| 161 |

python -m monai.bundle run --config_file configs/inference.json

|

| 162 |

```

|

| 163 |

|

| 164 |

-

# Disclaimer

|

| 165 |

-

This is an example, not to be used for diagnostic purposes.

|

| 166 |

-

|

| 167 |

# References

|

| 168 |

[1] Koohbanani, Navid Alemi, et al. "NuClick: a deep learning framework for interactive segmentation of microscopic images." Medical Image Analysis 65 (2020): 101771. https://arxiv.org/abs/2005.14511.

|

| 169 |

|

|

|

|

| 5 |

library_name: monai

|

| 6 |

license: apache-2.0

|

| 7 |

---

|

| 8 |

+

# Model Overview

|

| 9 |

A pre-trained model for segmenting nuclei cells with user clicks/interactions.

|

| 10 |

|

| 11 |

|

| 12 |

|

| 13 |

|

| 14 |

|

|

|

|

| 15 |

This model is trained using [BasicUNet](https://docs.monai.io/en/latest/networks.html#basicunet) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

|

| 16 |

|

| 17 |

## Data

|

|

|

|

| 22 |

```

|

| 23 |

<br/>

|

| 24 |

|

| 25 |

### Preprocessing

|

| 26 |

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

|

| 27 |

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

|

|

|

|

| 79 |

}

|

| 80 |

```

|

| 81 |

|

| 82 |

+

## Training configuration

|

| 83 |

+

The training was performed with the following:

|

| 84 |

+

|

| 85 |

+

- GPU: at least 12GB of GPU memory

|

| 86 |

+

- Actual Model Input: 5 x 128 x 128

|

| 87 |

+

- AMP: True

|

| 88 |

+

- Optimizer: Adam

|

| 89 |

+

- Learning Rate: 1e-4

|

| 90 |

+

- Loss: DiceLoss

|

| 91 |

|

| 92 |

+

|

| 93 |

+

## Input

|

| 94 |

+

5 channels

|

| 95 |

- 3 RGB channels

|

| 96 |

- +ve signal channel (this nuclei)

|

| 97 |

- -ve signal channel (other nuclei)

|

| 98 |

|

| 99 |

+

## Output

|

| 100 |

+

2 channels

|

| 101 |

- 0 = Background

|

| 102 |

- 1 = Nuclei
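
The channel layout listed under Input and Output can be illustrated with a small sketch. It is not taken from the bundle: the function names are hypothetical, encoding a click as a single pixel set to 1.0 is an assumption, and so is decoding the two output channels with a per-pixel argmax.

```python
from typing import List, Sequence, Tuple

Plane = List[List[float]]  # one H x W channel


def click_map(height: int, width: int, clicks: Sequence[Tuple[int, int]]) -> Plane:
    """Return an H x W plane with 1.0 at each (row, col) click (assumed encoding)."""
    plane = [[0.0] * width for _ in range(height)]
    for row, col in clicks:
        plane[row][col] = 1.0
    return plane


def build_input(rgb: Sequence[Plane],
                this_click: Tuple[int, int],
                other_clicks: Sequence[Tuple[int, int]]) -> List[Plane]:
    """Stack 3 RGB planes, the +ve signal plane and the -ve signal plane (5 channels)."""
    height, width = len(rgb[0]), len(rgb[0][0])
    positive = click_map(height, width, [this_click])   # this nucleus
    negative = click_map(height, width, other_clicks)   # other nuclei
    return [rgb[0], rgb[1], rgb[2], positive, negative]


def decode_output(scores: Sequence[Plane]) -> List[List[int]]:
    """Per-pixel argmax over the 2 output channels: 0 = Background, 1 = Nuclei."""
    background, nuclei = scores
    return [[1 if n > b else 0 for b, n in zip(b_row, n_row)]
            for b_row, n_row in zip(background, nuclei)]
```

With a 128 x 128 patch, `build_input` yields the 5 x 128 x 128 layout named under "Actual Model Input".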

|

| 103 |

|

| 104 |

|

| 105 |

|

| 106 |

+

|

| 107 |

+

## Performance

|

| 108 |

This model achieves the following Dice score on the validation data provided as part of the dataset:

|

| 109 |

|

| 110 |

- Train Dice score = 0.89

|

| 111 |

- Validation Dice score = 0.85
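
For readers unfamiliar with the metric, the Dice score of two binary masks is twice the overlap divided by the total foreground count. The sketch below is a plain-Python illustration, not the evaluation code used by the bundle. The function name is hypothetical, and returning 1.0 for two empty masks is an assumed convention.

```python
from typing import Sequence


def dice_score(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]) -> float:
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for binary 0/1 masks."""
    overlap = sum(p * t
                  for p_row, t_row in zip(pred, target)
                  for p, t in zip(p_row, t_row))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    if total == 0:
        return 1.0  # assumed convention: two empty masks count as a perfect match
    return 2.0 * overlap / total


# One overlapping pixel, two predicted and one labelled foreground pixels: 2 * 1 / 3.
score = dice_score([[1, 1], [0, 0]], [[1, 0], [0, 0]])
```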

|

| 112 |

|

| 113 |

|

| 114 |

+

#### Training Loss and Dice

|

| 115 |

A graph showing the training Loss and Dice over 50 epochs.

|

| 116 |

|

| 117 |

<br>

|

| 118 |

<br>

|

| 119 |

|

| 120 |

+

#### Validation Dice

|

| 121 |

A graph showing the validation mean Dice over 50 epochs.

|

| 122 |

|

| 123 |

<br>

|

|

|

|

| 140 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

|

| 141 |

```

|

| 142 |

|

| 143 |

+

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).
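
As a rough illustration of how these options vary by machine, the sketch below assembles the same `torchrun` argument list from a few parameters. The helper name and its defaults are hypothetical. It only covers the flags named in this README, and a real multi-node launch would likely need further rendezvous options that are not shown here.

```python
from typing import List, Sequence


def torchrun_command(nproc_per_node: int,
                     nnodes: int = 1,
                     standalone: bool = True,
                     config_files: Sequence[str] = ("configs/train.json",
                                                    "configs/multi_gpu_train.json"),
                     ) -> List[str]:
    """Build the argument list for the multi-GPU training command in this README."""
    args = ["torchrun"]
    if standalone:
        args.append("--standalone")  # drop this when the environment manages rendezvous
    # The bundle CLI receives the config list as one Python-style list literal.
    config_arg = "[" + ",".join(f"'{path}'" for path in config_files) + "]"
    args += [f"--nnodes={nnodes}",
             f"--nproc_per_node={nproc_per_node}",
             "-m", "monai.bundle", "run",
             "--config_file", config_arg]
    return args


# Same command as above, as a list suitable for subprocess.run(cmd, check=True).
cmd = torchrun_command(nproc_per_node=2)
```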

|

|

|

|

| 144 |

|

| 145 |

#### Override the `train` config to execute evaluation with the trained model:

|

| 146 |

|

|

|

|

| 160 |

python -m monai.bundle run --config_file configs/inference.json

|

| 161 |

```

|

| 162 |

|

| 163 |

# References

|

| 164 |

[1] Koohbanani, Navid Alemi, et al. "NuClick: a deep learning framework for interactive segmentation of microscopic images." Medical Image Analysis 65 (2020): 101771. https://arxiv.org/abs/2005.14511.

|

| 165 |

|

configs/metadata.json

CHANGED

|

@@ -1,7 +1,8 @@

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

-

"version": "0.0.

|

| 4 |

"changelog": {

|

|

|

|

| 5 |

"0.0.8": "enable deterministic training",

|

| 6 |

"0.0.7": "Update with figure links",

|

| 7 |

"0.0.6": "adapt to BundleWorkflow interface",

|

|

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.0.9",

|

| 4 |

"changelog": {

|

| 5 |

+

"0.0.9": "Update README Formatting",

|

| 6 |

"0.0.8": "enable deterministic training",

|

| 7 |

"0.0.7": "Update with figure links",

|

| 8 |

"0.0.6": "adapt to BundleWorkflow interface",

|
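
A version bump like this one pairs a new `version` value with a matching `changelog` key. A minimal check for that pairing could look like the sketch below. The function name is hypothetical and the check is not part of the bundle or of MONAI. It assumes only the two fields visible in this diff.

```python
import json


def version_has_changelog_entry(metadata_text: str) -> bool:
    """Return True when the "version" value appears as a key in "changelog"."""
    metadata = json.loads(metadata_text)
    return metadata["version"] in metadata.get("changelog", {})


# Fragment mirroring the fields shown in the diff above.
sample = """
{
    "version": "0.0.9",
    "changelog": {
        "0.0.9": "Update README Formatting",
        "0.0.8": "enable deterministic training"
    }
}
"""
is_consistent = version_has_changelog_entry(sample)
```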

docs/README.md

CHANGED

|

@@ -1,11 +1,10 @@

|

|

| 1 |

-

#

|

| 2 |

A pre-trained model for segmenting nuclei cells with user clicks/interactions.

|

| 3 |

|

| 4 |

|

| 5 |

|

| 6 |

|

| 7 |

|

| 8 |

-

# Model Overview

|

| 9 |

This model is trained using [BasicUNet](https://docs.monai.io/en/latest/networks.html#basicunet) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

|

| 10 |

|

| 11 |

## Data

|

|

@@ -16,17 +15,6 @@ unzip -q consep_dataset.zip

|

|

| 16 |

```

|

| 17 |

<br/>

|

| 18 |

|

| 19 |

-

## Training configuration

|

| 20 |

-

The training was performed with the following:

|

| 21 |

-

|

| 22 |

-

- GPU: at least 12GB of GPU memory

|

| 23 |

-

- Actual Model Input: 5 x 128 x 128

|

| 24 |

-

- AMP: True

|

| 25 |

-

- Optimizer: Adam

|

| 26 |

-

- Learning Rate: 1e-4

|

| 27 |

-

- Loss: DiceLoss

|

| 28 |

-

|

| 29 |

-

|

| 30 |

### Preprocessing

|

| 31 |

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

|

| 32 |

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

|

|

@@ -84,33 +72,45 @@ Example dataset.json

|

|

| 84 |

}

|

| 85 |

```

|

| 86 |

|

| 87 |

|

| 88 |

-

|

| 89 |

-

|

|

|

|

| 90 |

- 3 RGB channels

|

| 91 |

- +ve signal channel (this nuclei)

|

| 92 |

- -ve signal channel (other nuclei)

|

| 93 |

|

| 94 |

-

|

|

|

|

| 95 |

- 0 = Background

|

| 96 |

- 1 = Nuclei

|

| 97 |

|

| 98 |

|

| 99 |

|

| 100 |

-

|

|

|

|

| 101 |

This model achieves the following Dice score on the validation data provided as part of the dataset:

|

| 102 |

|

| 103 |

- Train Dice score = 0.89

|

| 104 |

- Validation Dice score = 0.85

|

| 105 |

|

| 106 |

|

| 107 |

-

|

| 108 |

A graph showing the training Loss and Dice over 50 epochs.

|

| 109 |

|

| 110 |

<br>

|

| 111 |

<br>

|

| 112 |

|

| 113 |

-

|

| 114 |

A graph showing the validation mean Dice over 50 epochs.

|

| 115 |

|

| 116 |

<br>

|

|

@@ -133,8 +133,7 @@ python -m monai.bundle run --config_file configs/train.json

|

|

| 133 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

|

| 134 |

```

|

| 135 |

|

| 136 |

-

Please note that the distributed training

|

| 137 |

-

Please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html) for more details.

|

| 138 |

|

| 139 |

#### Override the `train` config to execute evaluation with the trained model:

|

| 140 |

|

|

@@ -154,9 +153,6 @@ torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config

|

|

| 154 |

python -m monai.bundle run --config_file configs/inference.json

|

| 155 |

```

|

| 156 |

|

| 157 |

-

# Disclaimer

|

| 158 |

-

This is an example, not to be used for diagnostic purposes.

|

| 159 |

-

|

| 160 |

# References

|

| 161 |

[1] Koohbanani, Navid Alemi, et al. "NuClick: a deep learning framework for interactive segmentation of microscopic images." Medical Image Analysis 65 (2020): 101771. https://arxiv.org/abs/2005.14511.

|

| 162 |

|

|

|

|

| 1 |

+

# Model Overview

|

| 2 |

A pre-trained model for segmenting nuclei cells with user clicks/interactions.

|

| 3 |

|

| 4 |

|

| 5 |

|

| 6 |

|

| 7 |

|

|

|

|

| 8 |

This model is trained using [BasicUNet](https://docs.monai.io/en/latest/networks.html#basicunet) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

|

| 9 |

|

| 10 |

## Data

|

|

|

|

| 15 |

```

|

| 16 |

<br/>

|

| 17 |

|

| 18 |

### Preprocessing

|

| 19 |

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

|

| 20 |

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

|

|

|

|

| 72 |

}

|

| 73 |

```

|

| 74 |

|

| 75 |

+

## Training configuration

|

| 76 |

+

The training was performed with the following:

|

| 77 |

+

|

| 78 |

+

- GPU: at least 12GB of GPU memory

|

| 79 |

+

- Actual Model Input: 5 x 128 x 128

|

| 80 |

+

- AMP: True

|

| 81 |

+

- Optimizer: Adam

|

| 82 |

+

- Learning Rate: 1e-4

|

| 83 |

+

- Loss: DiceLoss

|

| 84 |

|

| 85 |

+

|

| 86 |

+

## Input

|

| 87 |

+

5 channels

|

| 88 |

- 3 RGB channels

|

| 89 |

- +ve signal channel (this nuclei)

|

| 90 |

- -ve signal channel (other nuclei)

|

| 91 |

|

| 92 |

+

## Output

|

| 93 |

+

2 channels

|

| 94 |

- 0 = Background

|

| 95 |

- 1 = Nuclei

|

| 96 |

|

| 97 |

|

| 98 |

|

| 99 |

+

|

| 100 |

+

## Performance

|

| 101 |

This model achieves the following Dice score on the validation data provided as part of the dataset:

|

| 102 |

|

| 103 |

- Train Dice score = 0.89

|

| 104 |

- Validation Dice score = 0.85

|

| 105 |

|

| 106 |

|

| 107 |

+

#### Training Loss and Dice

|

| 108 |

A graph showing the training Loss and Dice over 50 epochs.

|

| 109 |

|

| 110 |

<br>

|

| 111 |

<br>

|

| 112 |

|

| 113 |

+

#### Validation Dice

|

| 114 |

A graph showing the validation mean Dice over 50 epochs.

|

| 115 |

|

| 116 |

<br>

|

|

|

|

| 133 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

|

| 134 |

```

|

| 135 |

|

| 136 |

+

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

|

|

|

|

| 137 |

|

| 138 |

#### Override the `train` config to execute evaluation with the trained model:

|

| 139 |

|

|

|

|

| 153 |

python -m monai.bundle run --config_file configs/inference.json

|

| 154 |

```

|

| 155 |

|

| 156 |

# References

|

| 157 |

[1] Koohbanani, Navid Alemi, et al. "NuClick: a deep learning framework for interactive segmentation of microscopic images." Medical Image Analysis 65 (2020): 101771. https://arxiv.org/abs/2005.14511.

|

| 158 |

|