Update README.md

README.md

CHANGED

|

@@ -3,7 +3,15 @@ configs:

|

|

| 3 |

- config_name: default

|

| 4 |

data_files:

|

| 5 |

- split: test

|

| 6 |

-

path:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 |

---

|

| 8 |

|

| 9 |

# Dataset Card for LSDBench: Long-video Sampling Dilemma Benchmark

|

|

@@ -12,4 +20,22 @@ configs:

|

|

| 12 |

|

| 13 |

A benchmark that focuses on the sampling dilemma in long-video tasks. Through well-designed tasks, it evaluates the sampling efficiency of long-video VLMs.

|

| 14 |

|

| 15 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

- config_name: default

|

| 4 |

data_files:

|

| 5 |

- split: test

|

| 6 |

+

path: mc_qa_annotations_1300.json

|

| 7 |

+

license: apache-2.0

|

| 8 |

+

task_categories:

|

| 9 |

+

- visual-question-answering

|

| 10 |

+

language:

|

| 11 |

+

- en

|

| 12 |

+

pretty_name: lsdbench

|

| 13 |

+

size_categories:

|

| 14 |

+

- 1K<n<10K

|

| 15 |

---

|

| 16 |

|

| 17 |

# Dataset Card for LSDBench: Long-video Sampling Dilemma Benchmark

|

|

|

|

| 20 |

|

| 21 |

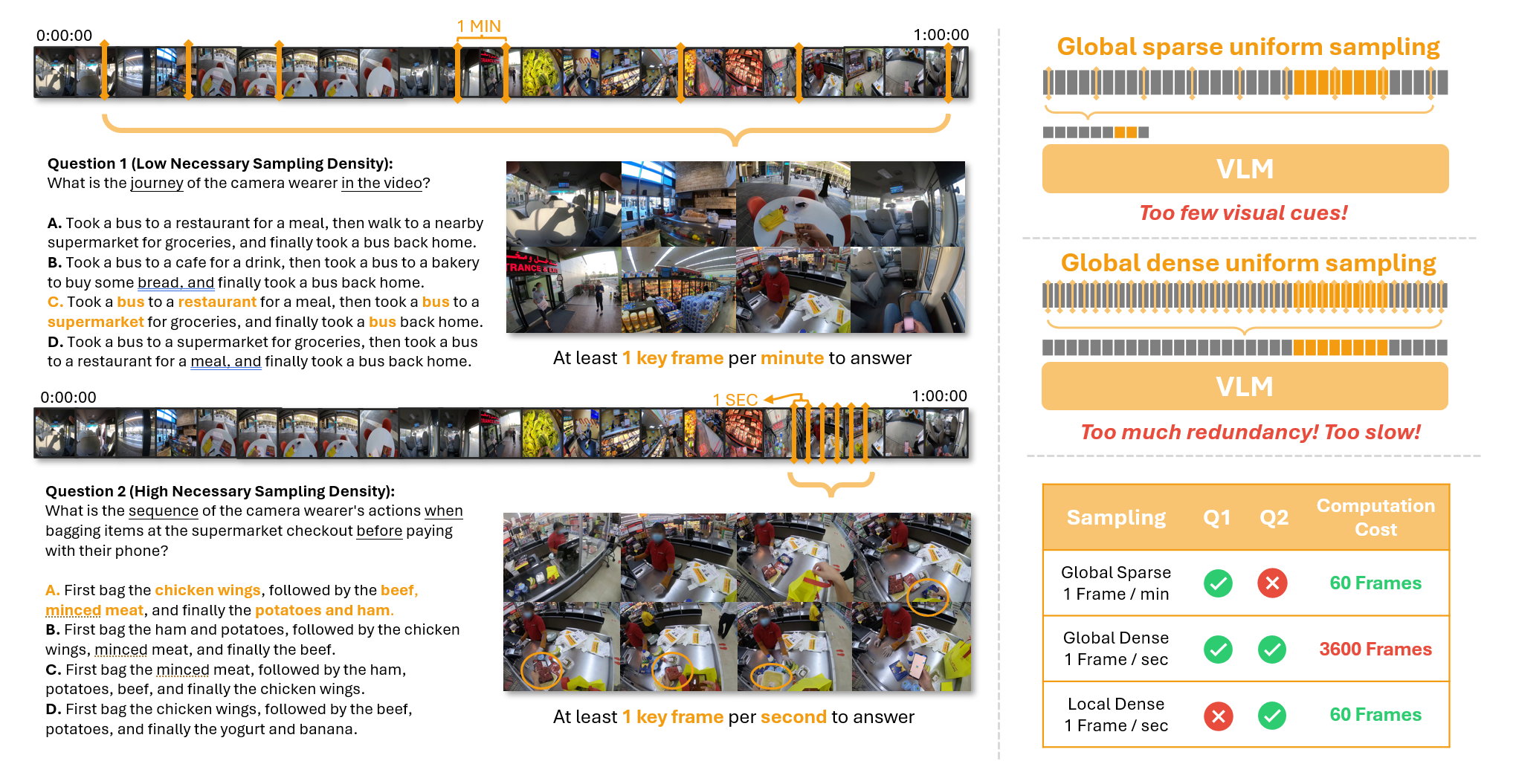

A benchmark that focuses on the sampling dilemma in long-video tasks. Through well-designed tasks, it evaluates the sampling efficiency of long-video VLMs.

|

| 22 |

|

| 23 |

+

Arxiv Paper: [📖 Does Your Vision-Language Model Get Lost in the Long Video Sampling Dilemma?](https://arxiv.org/abs/2503.12496)

|

| 24 |

+

|

| 25 |

+

Github : [https://github.com/dvlab-research/LSDBench](https://github.com/dvlab-research/LSDBench)

|

| 26 |

+

|

| 27 |

+

***(Left)** In Q1, identifying a camera wearer's visited locations requires analyzing the entire video. However, key frames are sparse, so sampling one frame per minute often provides enough information. In contrast, Q2 examines the packing order during checkout, requiring high-resolution sampling to capture rapid actions. **(Right)** **Sampling Dilemma** emerges in tasks like Q2: a low sampling density fails to provide sufficient visual cues for accurate answers, while a high sampling density results in redundant frames, significantly slowing inference speed. This challenge underscores the need for adaptive sampling strategies, especially for tasks with high necessary sampling density.*

|

| 28 |

+

|

| 29 |

+

## LSDBench

|

| 30 |

+

|

| 31 |

+

The LSDBench dataset is designed to evaluate the sampling efficiency of long-video VLMs. It consists of multiple-choice question-answer pairs based on hour-long videos, focusing on short-duration actions with high Necessary Sampling Density (NSD).

|

| 32 |

+

|

| 33 |

+

* **Number of QA Pairs:** 1304

|

| 34 |

+

* **Number of Videos:** 400

|

| 35 |

+

* **Average Video Length:** 45.39 minutes (ranging from 20.32 to 115.32 minutes)

|

| 36 |

+

* **Average Target Segment Duration:** 3 minutes

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

## Evaluation on LSDBench

|

| 40 |

+

|

| 41 |

+

Please see our [github repo](https://github.com/dvlab-research/LSDBench) for detailed evaluation guide.

|
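The sampling dilemma described in the card can be made concrete with a little arithmetic on the dataset statistics. The sketch below is illustrative only: it assumes plain uniform sampling at a fixed rate, which is not necessarily how the benchmark's evaluation code samples frames, and the helper name `uniform_sampling_cost` is hypothetical. The two constants are the averages quoted in the README (45.39-minute videos, 3-minute target segments).

```python
def uniform_sampling_cost(video_minutes, segment_minutes, frames_per_second):
    """Return (total_frames, frames_in_segment) under uniform sampling.

    Assumption: frames are taken at a constant rate over the whole video,
    so the target segment receives frames in proportion to its duration.
    """
    total_frames = round(video_minutes * 60 * frames_per_second)
    frames_in_segment = round(segment_minutes * 60 * frames_per_second)
    return total_frames, frames_in_segment


# Averages taken from the dataset card; individual videos will differ.
AVG_VIDEO_MIN = 45.39
AVG_SEGMENT_MIN = 3.0

if __name__ == "__main__":
    for label, fps in [("1 frame/min", 1 / 60), ("1 frame/sec", 1.0)]:
        total, hit = uniform_sampling_cost(AVG_VIDEO_MIN, AVG_SEGMENT_MIN, fps)
        print(f"{label}: {total} frames sampled, {hit} inside the target segment")
```

For an average-length video, one frame per minute leaves only about 3 frames inside the target segment, while one frame per second yields about 2723 frames, of which only 180 (roughly 7%) fall inside the segment. That gap is the trade-off the benchmark is built to expose.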